As storytellers all around the world will tell you, an effective story is one that resonates with its audience and stays with them long after it has been told.

But what separates a good story from a bad one? An effective one from a lackluster one? Depending on who you ask, you shall receive differing answers. For me and the talented team at Project Hindsight, it boils down to one word: limitations.

Limitations come in many shapes and sizes. Some may hinder us as storytellers, whilst others empower us. For Project Hindsight, a project that a few of my peers and I successfully pitched at Carnegie Mellon University’s Entertainment Technology Center, we choose to view them as opportunities.

But wait, what is Project Hindsight?

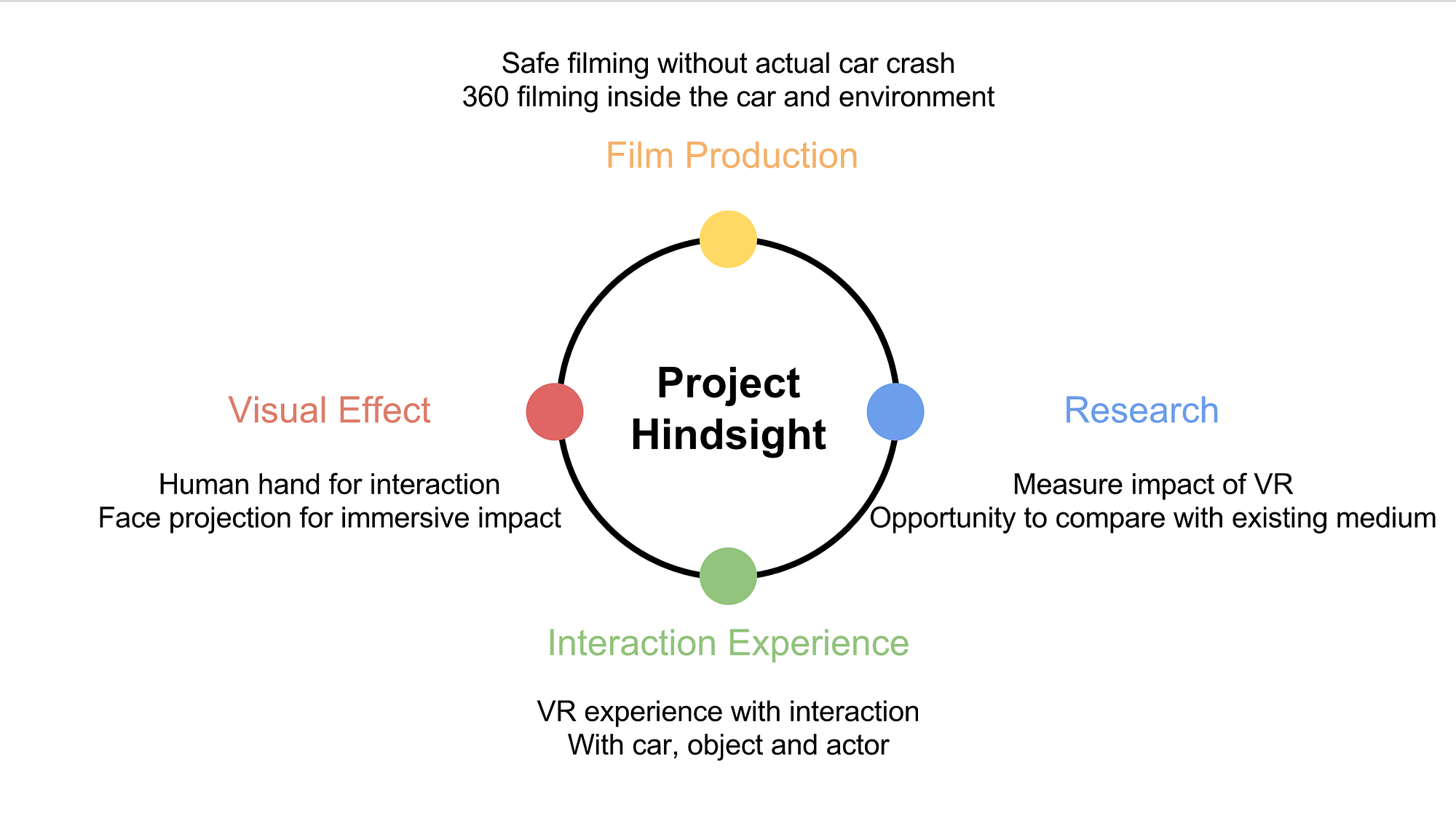

Project Hindsight is an interactive, virtual reality live-action experience that aims to explore the consequences of unsafe driving practices via an engaging and emotional story. We aim to explore the effectiveness of delivering such a story and message in a virtual reality environment.

We believe that virtual reality itself sets Project Hindsight apart from earlier attempts to tackle the issue of unsafe driving practices. There have been radio spots, billboard ads, digital films, newspaper campaigns… but the one thing that distinguishes Project Hindsight is immersion. We are hoping to deliver an emotional story in the most immersive environment possible in order to effect change.

However, telling a live-action story in Virtual Reality comes with limitations that we as a team are very mindful of. In this blog post I will outline the major questions and challenges that we are hoping to take on with Project Hindsight.

Transitions within a live-action setting

My team and I feel that one of the key tenets of our project is telling an interactive story. However, since we are doing this in live-action, one of our main challenges is how transitions (changes in the scene caused by the viewer’s interaction) occur within our experience. This challenge is not unique to us; it applies to the live-action virtual reality industry as a whole.

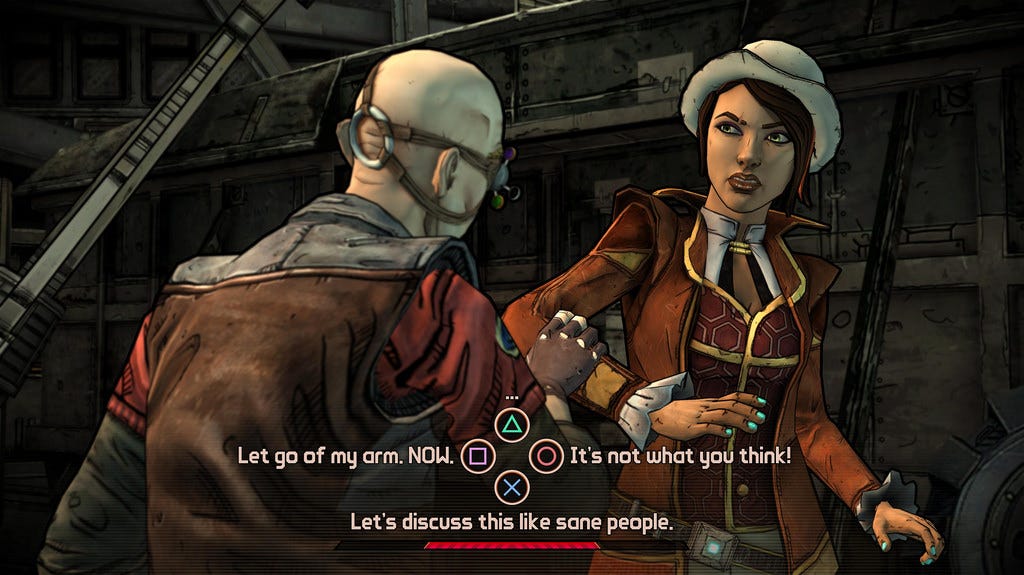

Currently, when an interaction drives the story, we see two ways of handling those transitions. The first is the freeze frame, wherein the scene freezes while the viewer is presented with choices that they can make. This works especially well for transformational experiences that want you to go through introspection and self-reflection as you make a choice. The second is putting the scene in an animation loop while the player decides what to do next. Prime examples of this are the games in the Telltale Games catalog.
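
To make the difference between the two approaches concrete, here is a minimal sketch in Python. It is not how Project Hindsight is built; the names `WaitMode`, `ChoicePoint`, and `frame_to_show` are made up for illustration, and it assumes a clip can be addressed by frame number and that an idle loop starts at the frame where the choice is offered.

```python
from dataclasses import dataclass
from enum import Enum


class WaitMode(Enum):
    FREEZE = "freeze"  # hold a single frame while the viewer decides
    LOOP = "loop"      # replay a short idle segment while the viewer decides


@dataclass
class ChoicePoint:
    frame: int            # frame at which the viewer is offered a choice
    mode: WaitMode
    loop_length: int = 0  # number of frames in the idle loop (LOOP mode only)


def frame_to_show(tick: int, choice: ChoicePoint) -> int:
    """Return which frame of the clip to display at playback tick `tick`,
    assuming the viewer has not made a choice yet (hypothetical helper)."""
    if tick < choice.frame:
        return tick  # normal playback before the choice point

    if choice.mode is WaitMode.FREEZE:
        return choice.frame  # the scene stays frozen on one frame

    if choice.loop_length <= 0:
        raise ValueError("LOOP mode needs a positive loop_length")

    # Cycle through frames [choice.frame, choice.frame + loop_length).
    waited = tick - choice.frame
    return choice.frame + waited % choice.loop_length


freeze = ChoicePoint(frame=10, mode=WaitMode.FREEZE)
loop = ChoicePoint(frame=10, mode=WaitMode.LOOP, loop_length=3)

freeze_frames = [frame_to_show(t, freeze) for t in range(8, 15)]
loop_frames = [frame_to_show(t, loop) for t in range(8, 15)]
```

In this sketch the freeze frame keeps returning the same frame once the choice point is reached, while the loop keeps cycling through a short segment, so the scene stays alive while the viewer thinks.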

Interactions within a live-action setting with CG objects

One of our stretch goals for this project is to explore how realistic interactions with CG objects can happen within a live-action environment in virtual reality. One of the hurdles the industry faces today is how to map 3D objects onto a 2D plane. Why is this important to our project? Since film is seen on a flat 2D plane, creating interactive and realistic three-dimensional CG objects within it is difficult.

This challenge is specific to live-action film in virtual reality. For instance, a game created for VR will be built as a 3D world, where 3D objects and their interactions work easily from a technical standpoint. However, since film exists on a 2D plane, creating a solution for interacting with realistic 3D objects will be one of the biggest challenges we are attempting to overcome with our project.

As mentioned, this is a stretch goal: we would like to be at the forefront of the technological revolution we are seeing with the advancement in VR capabilities. However, we have not put all our eggs in one basket. We as a team know that we will need alternative solutions in place, ones that will not impact timelines and deliverables in any significant way, in case our research does not coalesce into a solution that works for Project Hindsight. Still, we are excited about taking on such a heady challenge, and we will share our findings on this blog and hopefully through other avenues in the future.

Telling a story that resonates in Virtual Reality

Our goal of crafting a compelling experience rests on how we approach emotional storytelling within Virtual Reality while recognizing the limitations that this platform brings.

One of the major difficulties in telling an emotionally complex story that forces viewers to ask themselves questions is the “wow” factor that comes with Virtual Reality.

Watching a film in a cinema hall is familiar. Playing a video game on your television is familiar. However, wearing a VR headset and being a part of an experience that uses film and interaction is not familiar. Virtual Reality is still a budding industry, one that has not yet been embraced on a large scale. Due to this, the novelty factor of wearing a VR headset — the “wow” factor — inherently overpowers many other emotions or emotional arcs that creators want their audience to feel.

So the challenge here is staying true to one of our core tenets, delivering an emotionally engaging story, while immersing the audience in the world of Project Hindsight. By indirectly guiding their experience with visual and audio cues, engaging dialogue, and immersive settings, we hope to reach the audience while respecting our duty as storytellers. The team is aware that we need to find the right balance between story and interaction, so that the “wow” factor of being in a VR world does not overpower the story we are trying to get across. We hope that by being self-aware about this, we can create a story that resonates with just about anyone who decides to experience Project Hindsight.