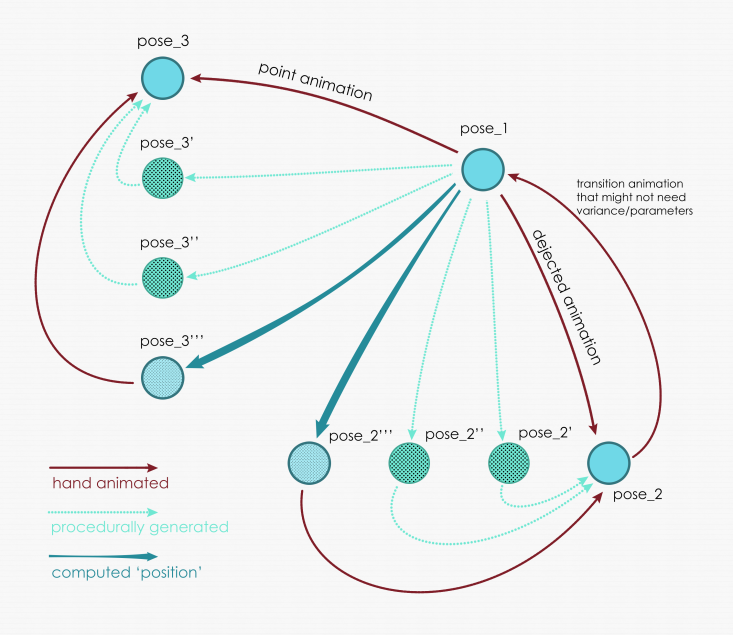

The straightforward way to design an animation system for HERB is to use a fixed pose-to-pose design, as illustrated in the image above.

The disadvantages of this approach are:

a. It’s a fixed graph, so in order to replay a sequence you have to go back through a set of unwanted poses. Additionally, because everything is hand animated, the graph would have to be huge to support every line of the play, which makes it hard for the operator to parse visually.

b. If the director wants to see a different version of an animation or pose, for example something faster or slower, this design doesn’t provide that functionality.

c. It forces the director to adapt to the tech rather than taking a more user-centric approach.

We looked for another solution that could solve at least some of these problems. First we focused on flexibility. Based on research we did (specifically the perception research I posted about before and, further, The Effect of Posture and Dynamics on the Perception of Emotion), we came up with the idea of parameterized animations. The workflow is to hand animate a gesture/action and then use a Blender script I have written to generate parameterized versions of that main action.

The two parameters we came up with based on the research paper are ‘openness’ and ‘intensity.’ Intensity is basically the speed of the animation; this is based on the paper’s findings that altering the speed alters the intensity of the perceived emotion. So for example

We define openness as the amplitude of the joint angles or the distance of the limb from the body. This is also based on the findings of the paper that indicate that blending a motion with a more neutral motion and thus altering the pose changes the perceived emotion. Here is an animated example of this concept as well.

Less Open

More Open
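To make the two parameters concrete, here is a minimal sketch in plain Python. It is not the Blender script mentioned above. It assumes an animation is a list of keyframes (time, joint angles), that intensity is applied by stretching keyframe times, and that openness is applied by blending each joint angle toward a neutral pose. The names `scale_intensity`, `scale_openness`, `wave` and `neutral` are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed representation: (time in seconds, {joint name: angle in radians}).
Keyframe = Tuple[float, Dict[str, float]]


def scale_intensity(keys: List[Keyframe], speed: float) -> List[Keyframe]:
    """Return a slower copy of the animation.

    speed is a fraction of the hand-animated speed (1.0 = original).
    Only values in (0, 1] are allowed, so a variant is never faster
    than the version that was hand animated.
    """
    if not 0.0 < speed <= 1.0:
        raise ValueError("speed must be in (0, 1]")
    return [(t / speed, dict(pose)) for t, pose in keys]


def scale_openness(
    keys: List[Keyframe], neutral: Dict[str, float], openness: float
) -> List[Keyframe]:
    """Blend every joint angle toward a neutral pose.

    openness = 1.0 keeps the hand-animated angles, 0.0 collapses the
    motion onto the neutral pose.
    """
    if not 0.0 <= openness <= 1.0:
        raise ValueError("openness must be in [0, 1]")
    return [
        (t, {j: neutral[j] + openness * (a - neutral[j]) for j, a in pose.items()})
        for t, pose in keys
    ]


# Hypothetical one-joint gesture: the elbow moves from 0 to 1 rad in 1 s.
wave = [(0.0, {"elbow": 0.0}), (1.0, {"elbow": 1.0})]
neutral = {"elbow": 0.0}

half_speed = scale_intensity(wave, 0.5)          # same angles, twice as long
half_open = scale_openness(wave, neutral, 0.5)   # same timing, half the amplitude
```

Both functions only ever reduce speed or amplitude relative to the hand-animated original, which is why the sketch restricts the parameters to at most 1.0.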

Now we define an animation set as the main original gesture plus, say, n1 less open versions, and n2 slower versions of each of these (n1+1) animations. That gives n2(n1+1) slowed-down animations on top of the (n1+1) full-speed ones, for (n1+1)(n2+1) in total. We hand animate the most extreme version, the upper limit of the physical capabilities of the robot (i.e. the fastest motion and the most open joint angles), so that we only have to test that version for collisions and velocity limits.
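The size of an animation set can be checked with a short enumeration. This is a sketch under assumptions: the openness and speed levels are spaced evenly below the hand-animated extreme (the spacing is my choice for illustration, not something fixed by the design), and `animation_set` is a hypothetical helper name.

```python
from itertools import product
from typing import List, Tuple


def animation_set(n1: int, n2: int) -> List[Tuple[float, float]]:
    """List the (openness, speed) pairs in one animation set.

    n1 = number of less open versions, n2 = number of slower versions.
    Level 1.0 is the hand-animated extreme; the other levels are
    evenly spaced below it (an assumption made for this sketch).
    """
    openness_levels = [1.0 - i / (n1 + 1) for i in range(n1 + 1)]
    speed_levels = [1.0 - i / (n2 + 1) for i in range(n2 + 1)]
    return list(product(openness_levels, speed_levels))


variants = animation_set(n1=2, n2=3)
slowed = [v for v in variants if v[1] < 1.0]

print(len(variants))  # (n1 + 1) * (n2 + 1) clips, counting the full-speed ones
print(len(slowed))    # n2 * (n1 + 1) of them are slowed-down versions
```

With n1 = 2 and n2 = 3 this yields 9 slowed-down clips, i.e. n2(n1+1), and 12 clips once the three full-speed versions are counted as well.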

Ideally we would want any animation to be able to lead into any other animation, to provide maximum flexibility and stay away from the closed-graph problems, but this is not really technically feasible because:

a. It would require us to programmatically generate every transition between n animation sets, which is an n² problem.

b. There’s no guarantee these transitions would not cause velocity faults or collisions, so those would have to be hand checked.

c. A large database of animations would have to be stored, many of which may never be useful because we may never transition between certain animations.
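To get a feel for how quickly the all-to-all option grows, here is a small counting sketch. It assumes a transition is an ordered pair of distinct clips (going from A to B is a different transition than going from B to A), and the numbers plugged in are made up for illustration.

```python
def all_to_all_transitions(n_sets: int, variants_per_set: int = 1) -> int:
    """Count ordered transitions between every pair of distinct clips."""
    clips = n_sets * variants_per_set
    return clips * (clips - 1)


# Hypothetical sizes: 10 animation sets, then the same 10 sets with
# 12 parameterized variants each.
base_only = all_to_all_transitions(10)
with_variants = all_to_all_transitions(10, variants_per_set=12)
print(base_only, with_variants)
```

Each of those transitions would need to be generated, stored and checked for velocity faults and collisions, which is the cost described in points a to c.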

So we came up with a middle-ground solution, which is not perfect but is a step toward something that eliminates at least some of the problems. We design a pose-to-pose graph, but we also have parameters for each pose, which we generate as explained above. To avoid exploding the graph with too many transitions, we want hidden transitions from each parameterized version of a pose back to the original. This way we have some flexibility while still working within the technical limitations.
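One way to picture the hidden transitions is as a routing rule on top of the pose-to-pose graph. The sketch below is an illustration under assumptions, not the actual system: a state is a (pose, variant) pair with `None` meaning the hand-animated original, graph edges exist only between originals, and a variant is entered only from its own original. The pose names and the `plan` function are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

State = Tuple[str, Optional[str]]  # (pose name, variant label or None for the original)


def plan(edges: Dict[str, Set[str]], current: State, target: State) -> List[Tuple[State, str]]:
    """Return the steps from current to target, each tagged with its kind.

    "hidden"   = automatic return from a variant to its original pose
    "authored" = hand-animated edge between two original poses
    "variant"  = entering a parameterized version of the target pose
    """
    if current == target:
        return []
    steps: List[Tuple[State, str]] = []
    start, variant = current
    if variant is not None:
        steps.append(((start, None), "hidden"))

    # Breadth-first search over the original poses only.
    parents: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == target[0]:
            break
        for nxt in sorted(edges.get(node, ())):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    if target[0] not in parents:
        raise ValueError(f"no path from {start!r} to {target[0]!r}")

    # Walk back from the target pose to recover the path in order.
    path: List[str] = []
    node = target[0]
    while node != start:
        path.append(node)
        node = parents[node]
    steps.extend(((p, None), "authored") for p in reversed(path))

    if target[1] is not None:
        steps.append((target, "variant"))
    return steps


# Hypothetical graph: "wave" and "point" are only reachable through "rest".
edges = {"rest": {"wave", "point"}, "wave": {"rest"}, "point": {"rest"}}
route = plan(edges, ("wave", "slow"), ("point", None))
for state, kind in route:
    print(state, kind)
```

With this rule the operator only ever sees the edges between original poses. Each variant adds one hidden edge back to its original instead of an edge to every other clip, so the number of transitions grows with the number of variants rather than with its square.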